Chapter 5 The validity of measurement

The previous sections on the reliability of measurement were concerned with the consistency of measurement, and addressed such questions as how broad the construct we wish to measure is and how many items are needed to measure it reliably. Answering these questions involved reliability analysis. Such analysis gives test constructors and test users some confidence that the test is giving a dependable measure with little measurement error, so meaningful measurement becomes possible. However, there remains the question of whether the test is any good for its intended use, that is, whether it measures something appropriate, meaningful and useful. Many textbooks describe this as establishing whether the test actually measures what it claims to measure; this is termed the validity of measurement. Traditionally, issues of validity are considered under three categories of evidence:

- Content validity

- Criterion related validity

- Construct validity

A distinction needs to be drawn between these categories of evidence and how the test merely appears to test users and test takers. The extent to which the test appears to measure what it claims to measure, without reference to any evidence from outside the test itself, can be described as face validity. For example, the instructions for the test, the practice items and the main test content may all appear relevant to the construct the test claims to measure. This impression can be misleading, but it can still be useful for candidates to see the apparent relevance of the test.

In contrast to face validity, the three categories of validity evidence link the test to sources of evidence outside of the test itself.

5.0.1 Content validity

This is the extent to which the test content accurately reflects the content domain of the construct. This is easier to achieve for tests of achievement than for tests of more abstract constructs such as spatial reasoning. Nevertheless, a test of spatial ability, for example, should probably include items involving the folding, rotation and comparison of complex visual images. Beyond this face validity, the test user should consult ‘expert sources’ to verify the content validity of the test, such as the relevant academic literature, existing tests and people working in relevant occupations.

5.0.3 Construct validity

This is the extent to which the test has accumulated evidence illustrating the nature of its theoretical construct. This is an ongoing process, drawing on many interrelationships, which increasingly illuminates the extent to which the test may be said to measure a construct. As such, all forms of validity evidence mentioned above are relevant to construct validation, but construct validation is broader than these procedures alone. A number of sources of evidence can contribute to construct validation, including:

5.0.3.1 The test’s correlation with other tests.

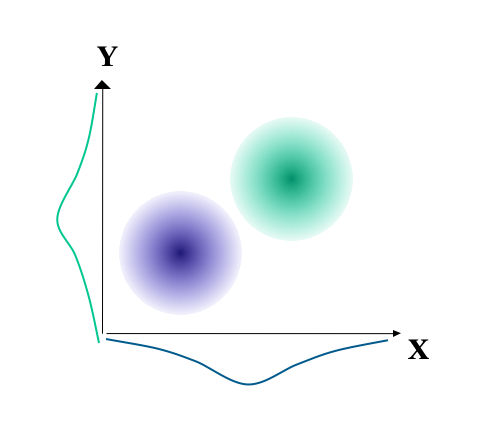

For example, scores from a test of spatial ability should correlate with scores from other established tests that claim to measure a similar construct of spatial ability. However, the correlations should not be too high (i.e. about 0.6 rather than 0.9), since a very high correlation means that the tests duplicate each other. Of course, correlating a test with other established tests is only of use if those established tests have themselves been shown to relate to observable behaviour outside of the tests. Furthermore, the test should not correlate highly with tests that claim to measure quite different constructs. For example, a test of spatial ability should not correlate highly with tests of knowledge of word meanings or of mathematical ability.
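As a minimal sketch of how such correlational evidence might be computed, the Python below implements the ordinary Pearson correlation and applies it to two comparisons. All scores and the names `new_spatial`, `established` and `vocabulary` are hypothetical, invented only for illustration; they are not data from any real test.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical scores for six candidates (illustrative only, not real data).
new_spatial = [12, 15, 9, 20, 17, 11]   # the test being validated
established = [30, 33, 29, 35, 31, 34]  # another test claiming to measure spatial ability
vocabulary = [24, 19, 22, 23, 20, 18]   # word-meaning test (a different construct)

r_similar = pearson_r(new_spatial, established)
r_different = pearson_r(new_spatial, vocabulary)
print(f"spatial vs established spatial test: r = {r_similar:.2f}")
print(f"spatial vs vocabulary test:          r = {r_different:.2f}")
```

With these made-up numbers the first correlation comes out at about 0.60 and the second at about 0.10, which is the pattern described above: a moderate correlation with a test of a similar construct and a weak one with a test of a different construct. In practice much larger samples would be needed before reading anything into such coefficients.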

5.0.3.2 Meaningful group differences.

For example, a test of spatial ability should produce significant differences in average scores between groups thought to require spatial ability, such as architects and template designers, and groups that are not considered to require it.

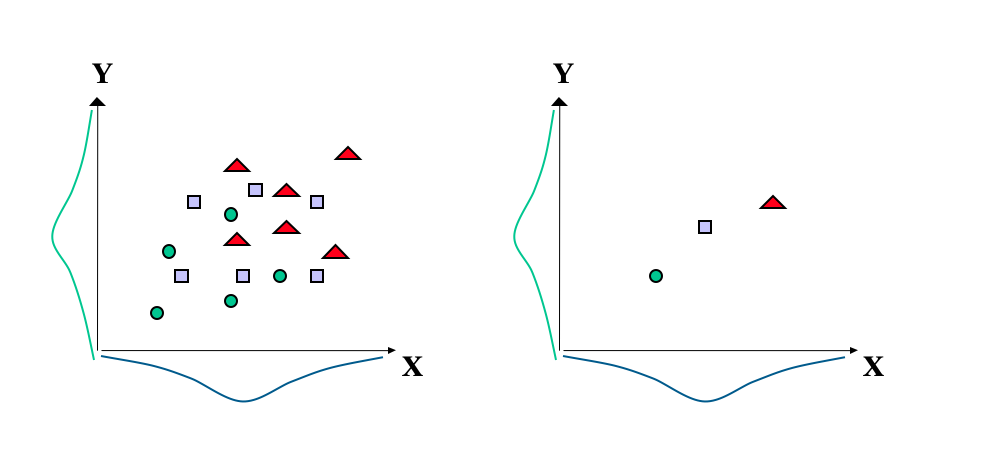
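One common way to check such a group difference is a two-sample t-test. The sketch below assumes Welch's version (which does not require equal group variances) and uses only the Python standard library; the group names and scores are hypothetical and chosen purely for illustration.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(group_a, group_b):
    """Welch's t statistic and approximate degrees of freedom for two independent groups."""
    ma, mb = mean(group_a), mean(group_b)
    va, vb = variance(group_a), variance(group_b)  # sample variances (n - 1 denominator)
    na, nb = len(group_a), len(group_b)
    se2_a, se2_b = va / na, vb / nb                # squared standard errors of each mean
    t = (ma - mb) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (na - 1) + se2_b ** 2 / (nb - 1))
    return t, df

# Hypothetical spatial-test scores (illustrative only, not real data).
architects = [24, 27, 22, 29, 25, 26, 28, 23]
comparison = [18, 21, 16, 20, 19, 22, 17, 19]  # group not thought to require spatial ability

t, df = welch_t(architects, comparison)
print(f"mean difference = {mean(architects) - mean(comparison):.2f}")
print(f"t = {t:.2f}, df = {df:.1f}")
```

Here t is about 5.81 on roughly 13.5 degrees of freedom, far beyond the two-tailed 5% critical value of about 2.16 for that many degrees of freedom. If SciPy is available, `scipy.stats.ttest_ind(architects, comparison, equal_var=False)` performs the same Welch test and also returns a p-value.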

5.0.3.3 Intervention or experimental effects.

For example, interventions such as visualisation training programmes or practical block building activities may be expected to improve scores on a test of spatial ability. Such interventions may take the form of an experiment, where, for example, one group receives the intervention and a comparison group does not.
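A simple way to summarise such an experiment is to compare score gains (post-test minus pre-test) between the intervention and comparison groups. The sketch below expresses the difference in mean gains as Cohen's d with a pooled standard deviation; this is one reasonable choice of effect size, not the only one. The helper names `gains` and `cohens_d` and all scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def gains(pre, post):
    """Post-test minus pre-test score for each person."""
    return [after - before for before, after in zip(pre, post)]

def cohens_d(x, y):
    """Standardised mean difference using the pooled sample standard deviation."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / sqrt(pooled_var)

# Hypothetical pre/post spatial-test scores (illustrative only, not real data).
trained_pre = [14, 17, 12, 15, 16, 13]
trained_post = [19, 19, 15, 21, 17, 17]  # received visualisation training
control_pre = [15, 16, 13, 14, 17, 12]
control_post = [16, 15, 15, 14, 18, 15]  # no intervention

trained_gain = gains(trained_pre, trained_post)
control_gain = gains(control_pre, control_post)
d = cohens_d(trained_gain, control_gain)
print(f"mean gain: trained {mean(trained_gain):.2f}, comparison {mean(control_gain):.2f}")
print(f"effect size on gains: d = {d:.2f}")
```

Using a comparison group matters because scores often rise on retesting through practice alone; only the extra gain in the trained group counts as evidence that the test responds to the intervention.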

The above are sources of evidence that support particular inferences made about the test scores. Validity, then, is really about establishing whether there is support for the inferences made about the test scores, and each inference needs its own supporting evidence: there should be as many validity studies as there are inferences.

5.1 The relationship between reliability and validity

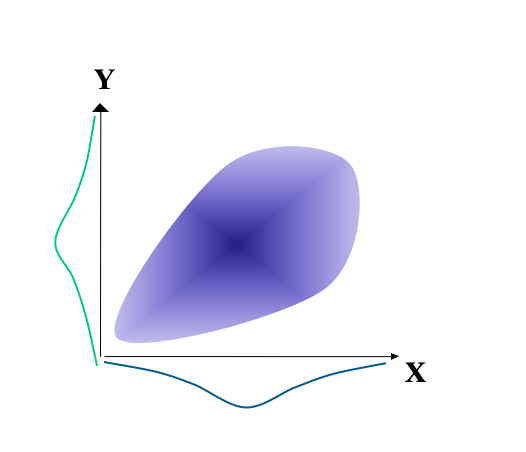

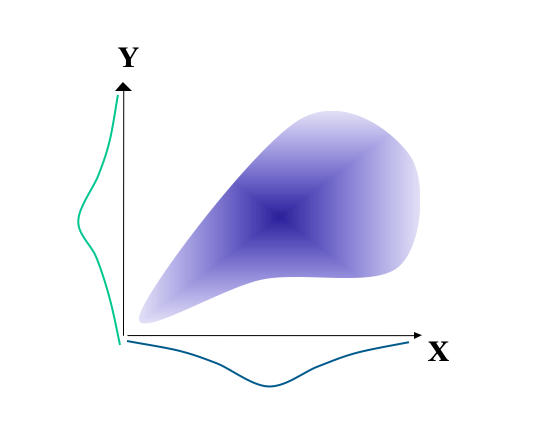

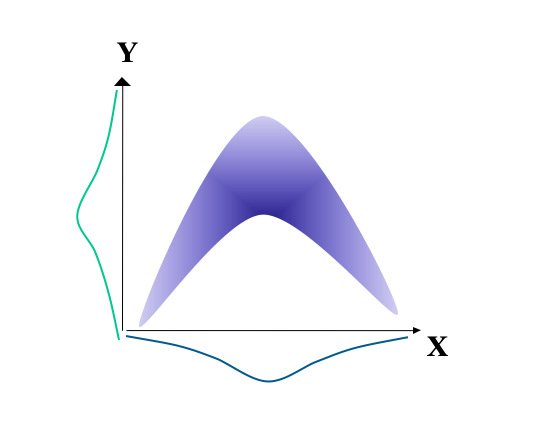

The maximum validity coefficient between a test and criterion is equal to the square root of the two reliabilities multiplied together:

$ validity_{max} = \sqrt{reliability_{test} \times reliability_{criterion}} $
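The square-root rule stated above is easy to evaluate directly. In the minimal sketch below, the function name `max_validity` is hypothetical, and the reliability values in the example are made up for illustration.

```python
from math import sqrt

def max_validity(test_reliability, criterion_reliability):
    """Upper bound on the test-criterion validity coefficient: sqrt(r_test * r_criterion)."""
    for r in (test_reliability, criterion_reliability):
        if not 0.0 <= r <= 1.0:
            raise ValueError("reliability coefficients must lie between 0 and 1")
    return sqrt(test_reliability * criterion_reliability)

# Hypothetical reliabilities (illustrative only).
print(f"{max_validity(0.81, 0.64):.2f}")  # fairly reliable test and criterion
print(f"{max_validity(0.36, 0.64):.2f}")  # same criterion, much less reliable test
```

With a test reliability of 0.81 and a criterion reliability of 0.64, no validity coefficient above 0.72 is possible; dropping the test reliability to 0.36 lowers that ceiling to 0.48, even if the test measures exactly the right construct.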

A test cannot be valid if it is not reliable, because low reliability places a low ceiling on any validity coefficient the test can achieve. However, a reliable test is not necessarily valid. Even if we have a test with little influence from error, what is being measured may still not be meaningful and useful.
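The claim that unreliability limits validity can be illustrated with a small simulation. The sketch below assumes the classical test theory model, in which each observed score is a true score plus independent random error, so that reliability equals true-score variance divided by observed-score variance. The error sizes, sample size and seed are arbitrary choices for illustration, not values from the text.

```python
import random
from math import sqrt

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

rng = random.Random(2024)  # fixed seed so the run is repeatable
n = 10_000

# Observed score = true score + random error (true-score variance is 1 throughout).
true_score = [rng.gauss(0, 1) for _ in range(n)]
criterion = [t + rng.gauss(0, 1) for t in true_score]        # reliability 1 / (1 + 1) = 0.5
reliable_test = [t + rng.gauss(0, 0.3) for t in true_score]  # reliability 1 / 1.09, about 0.92
unreliable_test = [t + rng.gauss(0, 2) for t in true_score]  # reliability 1 / 5 = 0.2

r_reliable = pearson_r(reliable_test, criterion)
r_unreliable = pearson_r(unreliable_test, criterion)
print(f"reliable test vs criterion:   r = {r_reliable:.2f}")
print(f"unreliable test vs criterion: r = {r_unreliable:.2f}")
```

Both simulated tests measure exactly the same true score as the criterion, so the only difference between them is error. Their expected correlations with the criterion therefore equal the maximum validity from the square-root rule, about 0.68 and 0.32, and the simulated values land close to these. The simulation cannot show the converse point, that a reliable test may still measure the wrong thing; that requires evidence from outside the test.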
